Public Member Functions | |

| CacheManager (Cache< String, Revisioned > cache, Cache< String, Long > revisions) | |

| Cache< String, Revisioned > | getCache () |

| long | getCurrentCounter () |

| Long | getCurrentRevision (String id) |

| void | endRevisionBatch () |

| Object | invalidateObject (String id) |

| void | addRevisioned (Revisioned object, long startupRevision) |

| void | addRevisioned (Revisioned object, long startupRevision, long lifespan) |

| void | clear () |

| void | addInvalidations (Predicate< Map.Entry< String, Revisioned >> predicate, Set< String > invalidations) |

| void | sendInvalidationEvents (KeycloakSession session, Collection< InvalidationEvent > invalidationEvents, String eventKey) |

| void | invalidationEventReceived (InvalidationEvent event) |

Protected Member Functions | |

| abstract Logger | getLogger () |

| void | bumpVersion (String id) |

| abstract void | addInvalidationsFromEvent (InvalidationEvent event, Set< String > invalidations) |

Protected Attributes | |

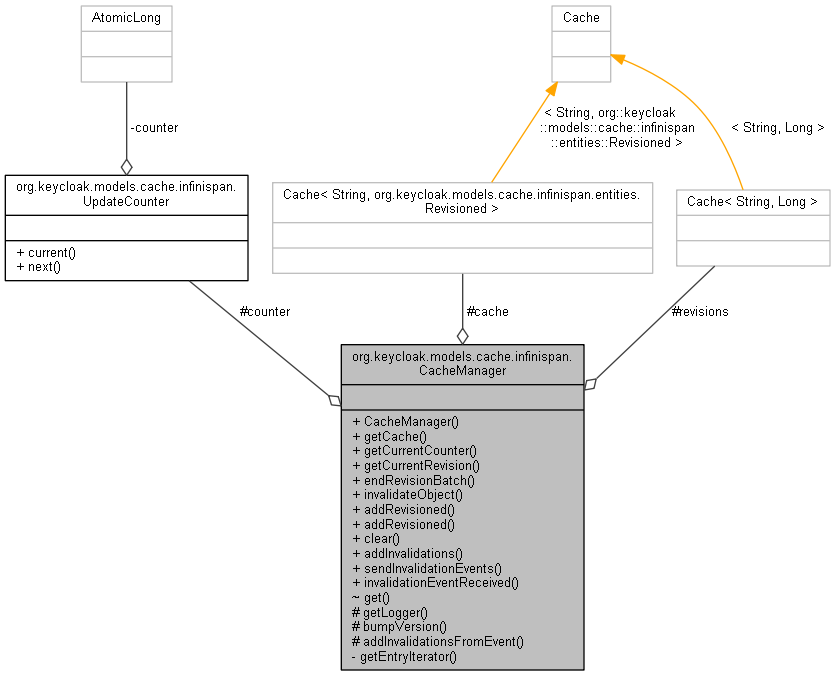

| final Cache< String, Long > | revisions |

| final Cache< String, Revisioned > | cache |

| final UpdateCounter | counter = new UpdateCounter() |

Package Functions | |

| public< T extends Revisioned > T | get (String id, Class< T > type) |

Private Member Functions | |

| Iterator< Map.Entry< String, Revisioned > > | getEntryIterator (Predicate< Map.Entry< String, Revisioned >> predicate) |

Detailed Description

Some notes on how this works:

This implementation manages optimistic locking and version checks itself. The reason is that Infinispan just doesn't behave the way we need it to. Not saying Infinispan is bad, just that we have specific caching requirements!

This is an invalidation cache implementation and requires two caches: Cache 1 is an invalidation cache, and Cache 2 is a local-only revision number cache.

Each node in the cluster maintains its own revision number cache for each entry in the main invalidation cache. This revision cache holds the version counter for each cached entity.
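The per-node revision check can be illustrated with a minimal sketch. It is a simplified, single-node model built on plain maps, not the actual Keycloak or Infinispan code: the class name `LocalRevisionSketch`, the `invalidate` helper, and the `boolean` return value of `addRevisioned` are assumptions made for illustration only.

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.atomic.AtomicLong;

// Hypothetical model of one node's bookkeeping; the real class uses Infinispan caches.
class LocalRevisionSketch {
    private final Map<String, Object> cache = new ConcurrentHashMap<>();   // stands in for the invalidation cache
    private final Map<String, Long> revisions = new ConcurrentHashMap<>(); // local-only revision numbers
    private final AtomicLong counter = new AtomicLong();                   // node-wide update counter

    // Revision the caller remembers before loading the entity from the database.
    long getCurrentRevision(String id) {
        return revisions.computeIfAbsent(id, k -> counter.get());
    }

    // Assumed helper: drop the cached value and bump the entity's revision.
    void invalidate(String id) {
        cache.remove(id);
        revisions.put(id, counter.incrementAndGet());
    }

    // Cache the value only if no bump happened since startupRevision was read.
    // The check and the put are not atomic here; the sketch ignores locking.
    boolean addRevisioned(String id, Object value, long startupRevision) {
        if (getCurrentRevision(id) > startupRevision) {
            return false; // the loaded value may be stale, so skip caching
        }
        cache.put(id, value);
        return true;
    }

    Object get(String id) {
        return cache.get(id);
    }

    public static void main(String[] args) {
        LocalRevisionSketch node = new LocalRevisionSketch();
        long startup = node.getCurrentRevision("user-1");
        node.invalidate("user-1"); // a concurrent update arrives
        System.out.println(node.addRevisioned("user-1", "stale copy", startup));
        long fresh = node.getCurrentRevision("user-1");
        System.out.println(node.addRevisioned("user-1", "fresh copy", fresh));
    }
}
```

In this model the first `addRevisioned` call is rejected because the revision moved past the remembered value, while the second call, made with a freshly read revision, is accepted.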

Cache listeners do not receive an event if that node does not have an entry for that item. So, consider the following sequence.

- Node 1 gets current counter for user. There currently isn't one as this user isn't cached.

- Node 1 reads user from DB

- Node 2 updates user

- Node 2 calls cache.remove(user). This does not result in an invalidation listener event to node 1!

- Node 1 checks the version counter and the check passes. The stale entry is cached.

The issue is that Node 1 doesn't have an entry for the user, so it never receives an invalidation listener event from Node 2 and therefore can't bump the version. When Node 1 then goes to cache the user, the entry is stale because the version number was never bumped.

So how is this issue fixed? Here is the pseudocode:

- Node 1 calls cacheManager.getCurrentRevision() to get the current local version counter of that user.

- Node 1: getCurrentRevision() pulls the current counter for that user.

- Node 1: getCurrentRevision() adds an "invalidation.key.userid" entry to the invalidation cache. It's just a marker, nothing else.

- Node 2 updates the user.

- Node 2 calls cache.remove(user) and cache.remove(invalidation.key.userid).

- Node 1 receives an invalidation event for invalidation.key.userid and bumps the version counter for that user.

- Node 1's version check fails, so it doesn't cache the user.
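The two scenarios above can be replayed in a small single-threaded simulation. This is only a sketch under stated assumptions: `MarkerKeySketch`, `Cluster`, `Node`, `updateUser` and `staleEntryCached` are hypothetical names, and the rule that a node hears about a removal only when it holds the key is modeled directly from the description rather than taken from Infinispan.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.HashSet;
import java.util.List;
import java.util.Map;
import java.util.Set;

// Hypothetical simulation of the scenario; not Keycloak or Infinispan code.
class MarkerKeySketch {
    static final String MARKER_PREFIX = "invalidation.key.";

    // Models the described behavior: only nodes holding a key receive its invalidation event.
    static class Cluster {
        final List<Node> nodes = new ArrayList<>();

        void remove(Node origin, String key) {
            for (Node n : nodes) {
                if (n != origin && n.keys.remove(key)) {
                    n.onInvalidated(key);
                }
            }
            origin.keys.remove(key);
        }
    }

    static class Node {
        final Cluster cluster;
        final boolean useMarker;
        final Set<String> keys = new HashSet<>();            // keys held in the invalidation cache
        final Map<String, Long> revisions = new HashMap<>(); // local-only revision cache
        long counter = 0;

        Node(Cluster cluster, boolean useMarker) {
            this.cluster = cluster;
            this.useMarker = useMarker;
            cluster.nodes.add(this);
        }

        long getCurrentRevision(String id) {
            if (useMarker) {
                keys.add(MARKER_PREFIX + id); // just a marker so this node receives invalidations
            }
            return revisions.computeIfAbsent(id, k -> counter);
        }

        void onInvalidated(String key) {
            String id = key.startsWith(MARKER_PREFIX) ? key.substring(MARKER_PREFIX.length()) : key;
            revisions.put(id, ++counter); // bump the version counter for that entity
        }

        void updateUser(String id) {
            cluster.remove(this, id);
            cluster.remove(this, MARKER_PREFIX + id);
        }

        // Returns true if the value loaded at startupRevision ends up cached.
        boolean addRevisioned(String id, long startupRevision) {
            if (revisions.getOrDefault(id, counter) > startupRevision) {
                return false; // version check fails, do not cache
            }
            keys.add(id);
            return true;
        }
    }

    // Replays the steps: Node 1 reads, Node 2 updates, Node 1 tries to cache.
    static boolean staleEntryCached(boolean useMarker) {
        Cluster cluster = new Cluster();
        Node node1 = new Node(cluster, useMarker);
        Node node2 = new Node(cluster, useMarker);
        long startup = node1.getCurrentRevision("userid"); // Node 1 then reads the user from the DB
        node2.updateUser("userid");
        return node1.addRevisioned("userid", startup);
    }

    public static void main(String[] args) {
        System.out.println("without marker, stale entry cached: " + staleEntryCached(false));
        System.out.println("with marker, stale entry cached: " + staleEntryCached(true));
    }
}
```

Without the marker, Node 1 never sees the removal and the stale entry is cached; with the marker, the removal of the marker key reaches Node 1, its revision is bumped, and the add is rejected.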

- Version

- Revision

- 1

Constructor & Destructor Documentation

◆ CacheManager()

|

inline |

Member Function Documentation

◆ addInvalidations()

|

inline |

◆ addInvalidationsFromEvent()

|

abstract protected |

◆ addRevisioned() [1/2]

|

inline |

◆ addRevisioned() [2/2]

|

inline |

◆ bumpVersion()

|

inline protected |

◆ clear()

|

inline |

◆ endRevisionBatch()

|

inline |

◆ get()

|

inline package |

◆ getCache()

|

inline |

◆ getCurrentCounter()

|

inline |

◆ getCurrentRevision()

|

inline |

◆ getEntryIterator()

|

inline private |

◆ getLogger()

|

abstract protected |

◆ invalidateObject()

|

inline |

◆ invalidationEventReceived()

|

inline |

◆ sendInvalidationEvents()

|

inline |

Member Data Documentation

◆ cache

|

protected |

◆ counter

|

protected |

◆ revisions

|

protected |

The documentation for this class was generated from the following file:

- D:/AppData/doxygen/keycloak/src/keycloak/src/main/java/org/keycloak/models/cache/infinispan/CacheManager.java

1.8.13
